When people communicate with each other, they share a language, expressions, and emotional cues that allow them to speak to and understand one another. Words play a crucial role in communication and must be used and understood correctly.

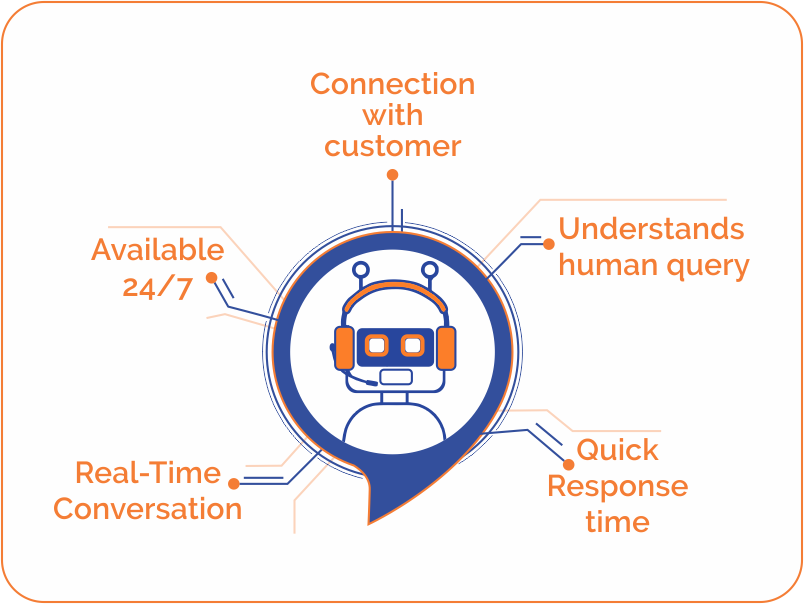

Customers seek answers to all kinds of queries, and they expect businesses to respond as quickly as possible and with the right solutions. But can humans work 24/7 and respond to millions of queries pouring in from different parts of the globe in multiple languages? Maybe YES! Or maybe NO!

Chatbots are certainly helping organizations support client conversations round the clock and automate client-facing business processes.

Chatbots were first introduced in the 1950s and 1960s but gained prominence in the 1980s. The primary role of a chatbot is to answer questions.

With advances in AI and NLP technologies, today's chatbots can interpret human language and, to a degree, emotions and sentiments.

A well-trained chatbot can converse with people in a natural, human-like way and respond instantly, all the while staying factual rather than subjective, as human-to-human conversations often are.

How can a chatbot understand the different languages that humans speak? The answer is Natural Language Processing (NLP), the technology that allows chatbots and other machines to make sense of human language.

AI has outperformed humans at several specific tasks, and NLP technology, particularly Machine Learning models, is now being used to better understand humans and the way they communicate.

NLP allows machines to handle customer support conversations and respond more quickly and accurately. The technology therefore focuses on building language-based responses that can be given to people when they ask questions.

The core aim of NLP is to build systems that can represent symbols, relations, text, audio, and contextual information in a form that computer algorithms can then use to interpret language and hold meaningful conversations.

NLP has proved beneficial in many ways, but it also has limitations. In this article, we discuss a few of them.

Limitations of NLP:

Maintaining context over time

Voice assistants such as Siri and Alexa can answer a range of commonly asked questions. But they do not remember these conversations over time, so they can't use earlier conversations to interpret the current context.
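To make the point concrete, here is a minimal Python sketch of a toy weather bot, assuming a single hypothetical "weather" intent and a hard-coded city list. The names `KNOWN_CITIES`, `stateless_reply`, and `ContextualBot` are illustrative only, not any assistant's real API. The stateless version interprets each turn in isolation, so a follow-up such as "And in Paris?" means nothing to it; the contextual version remembers just the last intent, which is about the simplest form of conversation memory there is.

```python
import re

# Hypothetical toy vocabulary: the only cities this bot recognizes.
KNOWN_CITIES = {"london", "paris", "tokyo"}


def extract_city(utterance):
    """Return the first known city mentioned in the utterance, or None."""
    for word in re.findall(r"[a-z]+", utterance.lower()):
        if word in KNOWN_CITIES:
            return word
    return None


def stateless_reply(utterance):
    """Interpret every turn in isolation, with no memory of earlier turns."""
    if "weather" in utterance.lower():
        city = extract_city(utterance)
        # "weather:<city>" stands in for a call to some weather service.
        return f"weather:{city}" if city else "Which city?"
    return "Sorry, I don't understand."


class ContextualBot:
    """Remember the last intent so a follow-up turn can reuse it."""

    def __init__(self):
        self.last_intent = None

    def reply(self, utterance):
        if "weather" in utterance.lower():
            self.last_intent = "weather"
        if self.last_intent != "weather":
            return "Sorry, I don't understand."
        city = extract_city(utterance)
        return f"weather:{city}" if city else "Which city?"


bot = ContextualBot()
print(bot.reply("What's the weather in London?"))  # weather:london
print(bot.reply("And in Paris?"))                  # weather:paris
print(stateless_reply("And in Paris?"))            # Sorry, I don't understand.
```

Production assistants track far richer state than one variable, but even this single remembered intent is what lets the follow-up turn make sense.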

Chatbots also can't handle deep conversations. They can't probe further and ask in-depth questions to understand user intent; they can only say what they have been trained to say.

Classifying information, routing it to the appropriate category, and passing it on to a human agent can also be error-prone, because chatbots may not be fully trained to extract contextual data from evolving jargon.
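A minimal sketch of classify-and-route, assuming hypothetical categories and trigger words in `ROUTES`; keyword overlap is a stand-in here for the trained classifier a real system would use. Messages that match no category are handed to a human agent, and the last example shows how even a small change in wording ("crashing" instead of "crash") is enough for a message to fall through.

```python
import re

# Hypothetical categories and trigger words; real systems train a classifier instead.
ROUTES = {
    "billing": {"invoice", "refund", "charge", "payment"},
    "technical": {"error", "crash", "login", "password"},
}


def route(message, min_hits=1):
    """Return the category with the most keyword hits, or hand off to a human."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    scores = {category: len(words & keywords) for category, keywords in ROUTES.items()}
    best = max(scores, key=scores.get)
    if scores[best] < min_hits:
        return "human_agent"  # nothing matched well enough to route automatically
    return best


print(route("I need a refund for this invoice"))  # billing
print(route("Login fails with an error"))         # technical
print(route("The app keeps crashing"))            # human_agent
```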

Depends on the quality of input data

AI relies entirely on data, and if the data is poor, its output will be poor too. The phrase “garbage in, garbage out” sums up this limitation well!

Understanding human emotions and words with precision

A machine can’t relate to human emotions and sentiments. Human agents are good at understanding human emotions and can engage in deeper conversations with customers.

Today's state-of-the-art NLP-driven Question/Answering systems, on which chatbots are built, deliver performance just above 90%, but they still fall short of human expectations. For example, if a chatbot cannot understand a word the user says, it asks the user to rephrase the question so that it can better understand the intent. In other cases, it may give a random answer. Neither outcome makes for a delightful customer experience.

Needs high computing resources

NLP requires considerable computing resources, including large amounts of memory to analyze large volumes of data.

An ontology is a specification of how to represent objects, concepts, and other entities in a particular system, together with the relationships between them. NLP still lacks a globally shared ontology that can encapsulate knowledge from assorted corpora.
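As a rough illustration of that definition, the Python sketch below stores an ontology as (subject, relation, object) triples and follows `is_a` links to find a concept's ancestors. The `Ontology` class, the relation names, and the example concepts are assumptions made for illustration; real ontologies are usually expressed in standards such as OWL, with much richer semantics.

```python
from dataclasses import dataclass, field


@dataclass
class Ontology:
    """A toy ontology stored as (subject, relation, object) triples."""

    triples: set = field(default_factory=set)

    def add(self, subject, relation, obj):
        self.triples.add((subject, relation, obj))

    def objects(self, subject, relation):
        """All objects linked to `subject` by `relation`."""
        return {o for s, r, o in self.triples if s == subject and r == relation}

    def ancestors(self, concept):
        """All concepts reachable by following `is_a` links upward."""
        seen, stack = set(), [concept]
        while stack:
            for parent in self.objects(stack.pop(), "is_a"):
                if parent not in seen:  # the guard also stops cycles
                    seen.add(parent)
                    stack.append(parent)
        return seen


onto = Ontology()
onto.add("laptop", "is_a", "computer")
onto.add("computer", "is_a", "device")
onto.add("laptop", "has_part", "battery")
print(sorted(onto.ancestors("laptop")))    # ['computer', 'device']
print(onto.objects("laptop", "has_part"))  # {'battery'}
```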

Provides only limited understanding for practical use

Open Question/Answering systems aim to encapsulate world knowledge, but they capture only a fraction of it.

For example, Google can only provide answers based on what has been published across billions of websites. Although Google has extended its coverage to include books, it still does not cover all of the world’s knowledge.

Also, the answers these open QA systems provide are generic and need further questioning and analysis before they can be put to practical use in the real world.

Even data used for academic and research purposes can take a long time to analyze.

Need for effective information retrieval systems

Information extraction and retrieval systems are only as effective as their ability to represent knowledge, with well-defined relationships between entities (hierarchical or otherwise).
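The sketch below, which assumes a tiny hypothetical product hierarchy (`IS_A`) and three toy documents (`DOCS`), hints at why explicit hierarchical relationships matter for retrieval: a query for "computer" matches nothing by keyword alone, but expanding it with the concept's descendants in the hierarchy finds the laptop and desktop documents. Query expansion is just one simple way to exploit such relationships, shown here for illustration.

```python
import re

# Hypothetical product hierarchy as child -> parent "is_a" links (assumed acyclic).
IS_A = {"laptop": "computer", "desktop": "computer", "computer": "device", "phone": "device"}

# Hypothetical document collection to search.
DOCS = {
    1: "How to replace a laptop battery",
    2: "Desktop fan is noisy",
    3: "Phone screen cracked",
}


def descendants(concept):
    """All concepts whose chain of is_a links passes through `concept`."""
    found = set()
    for child in IS_A:
        node = child
        while node in IS_A:
            node = IS_A[node]
            if node == concept:
                found.add(child)
                break
    return found


def search(query, expand=True):
    """Return ids of documents containing the query term (or, if expanded, a descendant)."""
    terms = {query}
    if expand:
        terms |= descendants(query)
    hits = []
    for doc_id, text in DOCS.items():
        words = set(re.findall(r"[a-z]+", text.lower()))
        if words & terms:
            hits.append(doc_id)
    return hits


print(search("computer", expand=False))  # []
print(search("computer"))                # [1, 2]
print(search("device"))                  # [1, 2, 3]
```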

Despite these limitations, NLP and the chatbots built on it are helping improve our material quality of life.

Furthermore, the Deep Learning-based QA systems at the heart of many chatbots can keep learning: the more we use them, the more they learn.

As Deep Learning advances and finds its way into NLP, we can expect chatbots that hold meaningful conversations in any language, since DL can help with real-time machine translation, sentiment analysis, text classification, and image recognition.